Langchain Tutorial

- Introduction to Langchain

- Setting Up Langchain and Groq

- MODEL I/O

- Generate Predictions

- Prompt Templates

- Getting Structured Output

- Building an AI Agent

- Other Langchain Features

  - Retrieval Augmented Generation (RAG)

  - Memory Module

Introduction to Langchain

LangChain is an AI toolkit that lets you build complex applications on top of LLMs such as ChatGPT, DeepSeek, and Claude.

You can use it to create prompt templates:

prompt_template = PromptTemplate.from_template(
    "List {n} cooking/meal titles for {cuisine} cuisine."
)

And you can turn templates into chains, which you can link together in a sequence:

complex_chain = SequentialChain(
    chains=[chain1, chain2],
    input_variables=["genre"],
    output_variables=["synopsis", "titles"],
    verbose=True,
)

output = complex_chain({"genre": "comedy"})
print(f"Output: {output}")

You can also use it to create agents with context awareness and reasoning ability.

Setting Up Langchain and Groq

- Install the langchain and langchain-groq libraries:

pip install langchain langchain-groq

We’ll use the Groq API as our free alternative to OpenAI, Claude, or others, which is why we install the langchain-groq integration package.

Getting API Access

- Create a free account on Groq

- Go to API Keys and create an API token

- Save this token securely - we’ll need it for authentication

- Set your API token in an environment variable:

export GROQ_API_KEY='your-api-key-here'

or set it in code (this requires importing the os library):

import os

os.environ["GROQ_API_KEY"] = "your_groq_api_token"

Once everything is installed, we are ready to start using LangChain.

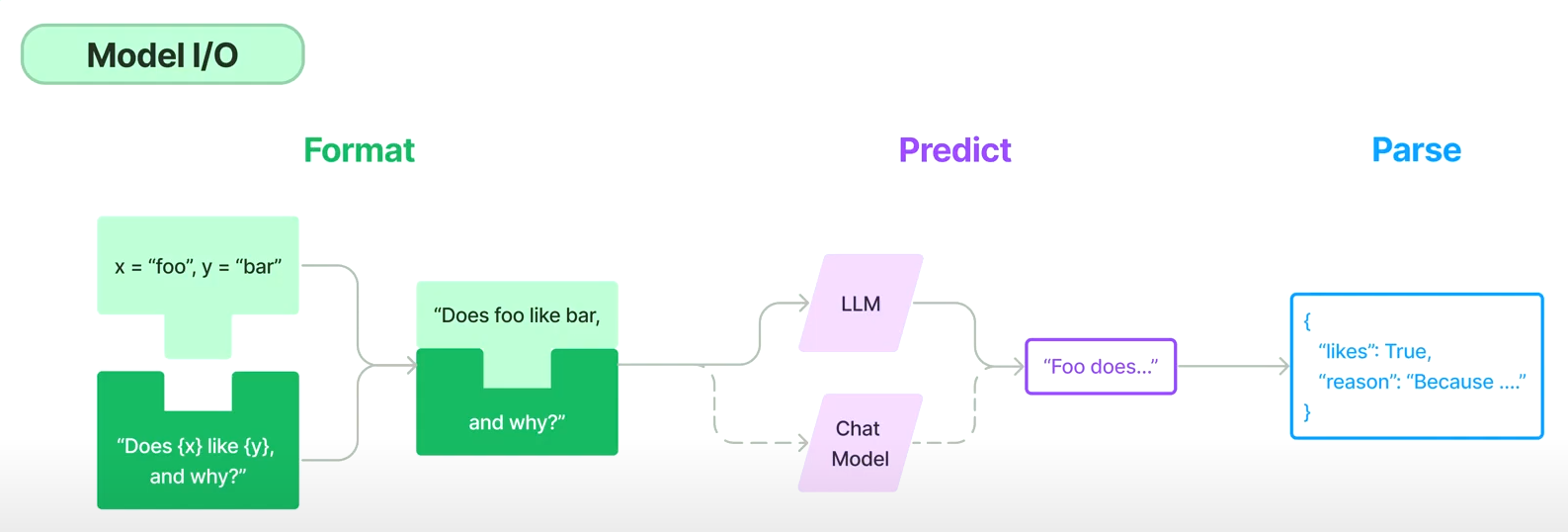

MODEL I/O

In this section, we will understand how it all works. You can think of Langchain as a sort of wrapper around LLMs:

- It abstracts away the implementation details,

- and it gives us a more useful way to interact with them.

To interact with an LLM:

- The first step is the prompt. Langchain gives us a way to format a prompt with variables.

- Next, it sends your prompt as input to the LLM, which can be any LLM of your choice.

- After sending the prompt, you get a string output. This is called the predict phase.

- Sometimes the string output isn’t in the format you want, so you can add a parser at the end of the sequence. It formats the text output so that it complies with your output model.
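To make the three phases concrete, here is a minimal plain-Python sketch of the same flow, with no LangChain involved. The names format_prompt, fake_llm, and parse_movie are hypothetical, and fake_llm returns a canned string instead of calling a real model, so treat this as an illustration of the idea rather than how LangChain implements it.

```python
import json

# Phase 1: format a prompt from a template with named variables.
TEMPLATE = "Recommend one {genre} movie. Reply as JSON with keys title and year."


def format_prompt(template: str, **values: str) -> str:
    return template.format(**values)


# Phase 2: predict. fake_llm is a hypothetical stand-in for a real model call;
# it ignores the prompt and returns a canned string so the sketch runs offline.
def fake_llm(prompt: str) -> str:
    return '{"title": "Con Air", "year": 1997}'


# Phase 3: parse the raw string into the structure the app expects.
def parse_movie(text: str) -> dict:
    data = json.loads(text)
    return {"title": str(data["title"]), "year": int(data["year"])}


prompt = format_prompt(TEMPLATE, genre="action")
raw_output = fake_llm(prompt)
movie = parse_movie(raw_output)

print(prompt)
print(movie)
```

The rest of this tutorial replaces each of these hand-written pieces with a LangChain component: a prompt template, a chat model, and an output parser.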

Generate Predictions

- We start by importing ChatGroq from the langchain_groq library:

from langchain_groq import ChatGroq

- Then, we create an instance of the LLM:

# Initialize the Groq chat model
# We're using the LLaMA 3 70B model, which is one of the latest available on Groq
llm = ChatGroq(
    model="llama3-70b-8192",
    temperature=0.3,
    max_tokens=500,
)

- To make a prediction, use the invoke method and pass in the prompt as a string:

# Generate the response using LangChain's invoke method
response = llm.invoke("What are the 7 wonders of the world?")
print(f"Response: {response.content}")

A better version of the above code uses HumanMessage and SystemMessage:

from langchain_groq import ChatGroq
from langchain.schema import HumanMessage, SystemMessage

# Initialize the Groq chat model
chat_model = ChatGroq(
    model="llama3-70b-8192",
    temperature=0.7,  # Slightly higher temperature for more creative responses
    max_tokens=500,
)

# Define the system message for pirate personality with emojis
system_message = SystemMessage(
    content="You are a friendly pirate who loves to share knowledge. Always respond in pirate speech, use pirate slang, and include plenty of nautical references. Add relevant emojis throughout your responses to make them more engaging. Arr! ☠️🏴☠️"
)

# Define the question
question = "What are the 7 wonders of the world?"

# Create messages with the system instruction and question
messages = [
    system_message,
    HumanMessage(content=question),
]

# Get the response
response = chat_model.invoke(messages)

# Print the response
print("\nQuestion:", question)
print("\nPirate Response:")
print(response.content)

Prompt Templates

Most of the time, if you are using LLMs for an app, you are going to want a prompt template that you can use to create multiple other prompts just by plugging in some values.

- Import PromptTemplate:

from langchain.prompts import PromptTemplate

- In the following prompt template, we want to list n meal ideas for a particular type of cuisine:

# Create a prompt template for generating meal titles
prompt_template = PromptTemplate.from_template(
    "List {n} cooking/meal titles for {cuisine} cuisine (name only)."
)

- To create a prompt from the template, use the format method and provide values for the placeholders:

prompt = prompt_template.format(n=3, cuisine="italian")
response = llm.invoke(prompt)

This is very useful if you want to build an app and expose only a few fields for your end user to fill in. Your prompt template then does the rest of the work.

- Here is a complete example that uses the pipe operator (|) to create a RunnableSequence, chain = prompt_template | llm:

from langchain_groq import ChatGroq
from langchain.prompts import PromptTemplate

# Initialize the Groq chat model
llm = ChatGroq(
    model="llama3-70b-8192",
    temperature=0.3,
    max_tokens=500,
)

# Create a prompt template for generating meal titles
prompt_template = PromptTemplate.from_template(
    "List {n} cooking/meal titles for {cuisine} cuisine (name only)."
)

# Create a runnable chain using the pipe operator
chain = prompt_template | llm

# Run the chain with specific parameters
response = chain.invoke({
    "n": 5,
    "cuisine": "Italian"
})

# Print the response
print("\nPrompt: List 5 cooking/meal titles for Italian cuisine (name only).")
print("\nResponse:")
print(response.content)

Getting Structured Output

What if we want structured output? For example, say you are building an AI app for movie recommendations, and your requirement is that every movie has a title, a genre, and a year of release:

title: str
genre: list[str]
year: int

How do you guarantee that the LLM always gives you information in this structure?

- To solve this problem, LangChain has something called output parsers.

- To use one, first install the pydantic library, which we will use to define the structure of our output model:

pip install pydantic

- Then, create a pydantic model for the movie object (add from pydantic import BaseModel, Field):

# The description helps the LLM know what to put in each field.
class Movie(BaseModel):
    title: str = Field(description="The title of the movie.")
    genre: list[str] = Field(description="The genre of the movie.")
    year: int = Field(description="The year the movie was released.")

- Next, create a parser using the PydanticOutputParser class (add from langchain.output_parsers import PydanticOutputParser):

parser = PydanticOutputParser(pydantic_object=Movie)

- Then, define a prompt template that instructs the LLM on how to format the output:

prompt_template_text = """
Respond with a movie recommendation based on the query:\n
{format_instructions}\n
{query}
"""

format_instructions = parser.get_format_instructions()

prompt_template = PromptTemplate(
    template=prompt_template_text,
    input_variables=["query"],
    partial_variables={"format_instructions": format_instructions},
)

- Now you can run the prompt and get structured output:

prompt = prompt_template.format(query="A 90s movie with Nicolas Cage.")
text_output = llm.invoke(prompt)
print(text_output.content)  # printed in JSON format

parsed_output = parser.parse(text_output.content)
print(parsed_output)  # title='Con Air' genre=['Action', 'Thriller'] year=1997

- Another way to run the prompt is to use LangChain Expression Language (LCEL):

# Using LangChain Expression Language (LCEL)
chain = prompt_template | llm | parser
response = chain.invoke({"query": "A 90s movie with Nicolas Cage."})
print(response)

Building an AI Agent

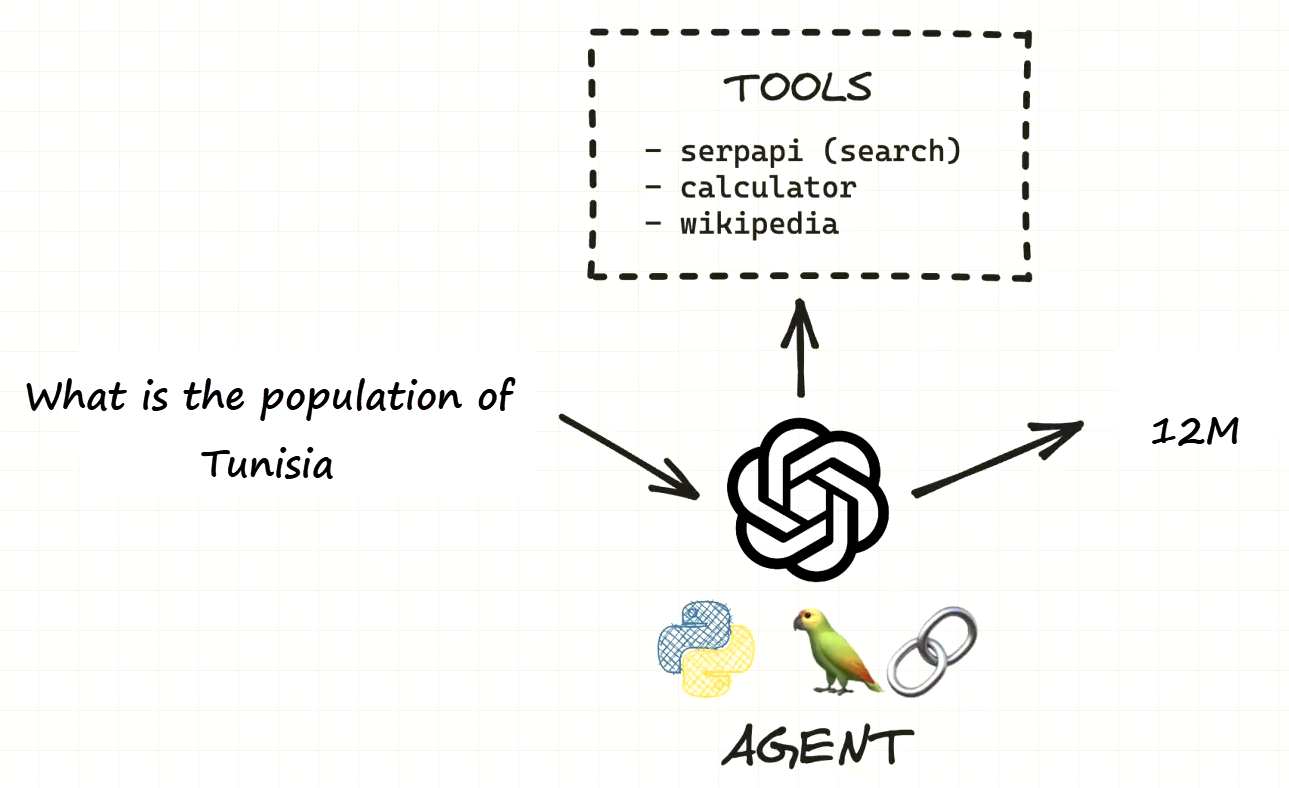

LLM agents are the most interesting concept in the LangChain toolkit.

Earlier we used a chain that executes a fixed sequence of actions one by one.

But what if we had a complex problem that requires multiple non-deterministic steps to solve?

This is where an agent comes in. Rather than having a static sequence of steps, an agent instead has a set of tools that it can use. And tools are literally anything that you can implement as a function. It could be a tool to search the internet, or search Wikipedia or call external APIs. The agent then uses an LLM as a reasoning engine to figure out its next step, such as which tool to use and what information to supply to that tool. It can then use the output of one tool as input to another tool and repeat that process until it solves the initial problem.
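Before the LangChain version, the loop described above can be sketched in plain Python. Everything here is a hypothetical stand-in: fake_reasoner plays the role of the LLM with hard-coded decisions, the tool names are invented, and the population numbers are made-up placeholders rather than real data.

```python
from typing import Callable


# Tools are ordinary functions. Both are hypothetical stand-ins:
# lookup_population uses made-up placeholder numbers, not real data.
def lookup_population(place: str) -> str:
    placeholder = {"A": 120, "B": 45}
    return str(placeholder[place])


def subtract(expression: str) -> str:
    left, right = expression.split("-")
    return str(int(left) - int(right))


TOOLS: dict[str, Callable[[str], str]] = {
    "lookup_population": lookup_population,
    "subtract": subtract,
}


def fake_reasoner(question: str, observations: list[str]) -> tuple[str, str]:
    """Stand-in for the LLM: picks the next (tool, input) from what is known so far."""
    if len(observations) == 0:
        return ("lookup_population", "A")
    if len(observations) == 1:
        return ("lookup_population", "B")
    if len(observations) == 2:
        return ("subtract", f"{observations[0]}-{observations[1]}")
    return ("final_answer", observations[-1])


def run_agent(question: str, max_steps: int = 5) -> str:
    observations: list[str] = []
    for _ in range(max_steps):
        action, action_input = fake_reasoner(question, observations)
        if action == "final_answer":
            return action_input
        # Run the chosen tool and feed its output back into the next decision.
        observations.append(TOOLS[action](action_input))
    raise RuntimeError("agent did not finish within max_steps")


answer = run_agent("What is the population difference between A and B?")
print(answer)
```

In a real agent the hard-coded branches in fake_reasoner are replaced by an LLM call that reads the question, the tool descriptions, and the observations so far. The max_steps limit is one simple way to stop a loop that never reaches a final answer.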

- Here is an example:

# pip install langchain langchain_community langchain_groq duckduckgo-search numexpr
# (numexpr is required by LLMMathChain)

from langchain_groq import ChatGroq
from langchain_community.tools import DuckDuckGoSearchRun
from langchain.chains import LLMMathChain
from langchain.prompts import PromptTemplate
from langchain.agents import AgentExecutor, Tool
from langchain.agents.structured_chat.base import StructuredChatAgent

# Initialize the Groq chat model
llm = ChatGroq(
    model="llama3-70b-8192",
    temperature=0.3,
    max_tokens=1024,
)

# Custom prompt for LLMMathChain
math_prompt = PromptTemplate.from_template(
    "Calculate the following expression and return the result in the format 'Answer: <number>': {question}"
)

# Set up the math chain
llm_math_chain = LLMMathChain.from_llm(llm=llm, prompt=math_prompt, verbose=True)

# Initialize tools
# Search tool: gets the latest information from the web
search = DuckDuckGoSearchRun()

# Calculator tool: guarantees that arithmetic results are computed properly
calculator = Tool(
    name="calculator",
    description="Use this tool for arithmetic calculations. Input should be a mathematical expression.",
    func=lambda x: llm_math_chain.run({"question": x}),
)

# List of tools for the agent
tools = [
    Tool(
        name="search",
        description="Search the internet for information about current events, data, or facts. Use this for looking up population numbers, statistics, or other factual information.",
        func=search.run
    ),
    calculator
]

# Create the agent using StructuredChatAgent
agent = StructuredChatAgent.from_llm_and_tools(
    llm=llm,
    tools=tools
)

# Initialize the agent executor
agent_executor = AgentExecutor.from_agent_and_tools(
    agent=agent,
    tools=tools,
    handle_parsing_errors=True
)

# Run the agent
result = agent_executor.invoke({"input": "What is the population difference between TUN and ALG?"})
print(result["output"])

Other Langchain Features

Retrieval Augmented Generation (RAG)

RAG lets you connect an LLM to a data source, such as a database, an API, or a document, and have the LLM pull information from that source. This is useful if you want to build something like a chatbot that answers questions against a large data store or a large set of documents.
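As a rough picture of the retrieve-then-prompt idea, here is a plain-Python sketch that does not use LangChain. It ranks documents by simple word overlap, which is only a stand-in for the embedding-based search a real RAG setup would typically use. The documents and the names tokenize, retrieve, and build_prompt are made up for illustration.

```python
# Toy document store; the texts are made up for illustration.
DOCUMENTS = [
    "LangChain prompt templates fill named placeholders with values.",
    "Groq serves open models through a hosted API.",
    "Output parsers turn model text into structured objects.",
]


def tokenize(text: str) -> set[str]:
    return {word.strip(".,?!").lower() for word in text.split()}


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Rank documents by how many words they share with the question."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    joined = "\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


question = "What do output parsers do?"
context = retrieve(question, DOCUMENTS)
prompt = build_prompt(question, context)
print(prompt)
```

The resulting prompt is what you would send to the LLM, so the model answers from the retrieved text instead of relying only on what it learned during training.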

Memory Module

The memory module lets you store context or chat history so that you can use it as part of your prompt and build something that remembers past messages.
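The idea can be sketched without LangChain as a small buffer that replays recent messages in front of each new question. ChatMemory and its methods are hypothetical names for this illustration, not LangChain classes.

```python
class ChatMemory:
    """Keeps the most recent messages so they can be replayed in the next prompt."""

    def __init__(self, max_messages: int = 6) -> None:
        self.max_messages = max_messages
        self.messages: list[tuple[str, str]] = []

    def add(self, role: str, text: str) -> None:
        self.messages.append((role, text))
        # Drop the oldest messages once the buffer is full.
        self.messages = self.messages[-self.max_messages:]

    def as_prompt(self, new_question: str) -> str:
        history = "\n".join(f"{role}: {text}" for role, text in self.messages)
        if history:
            return f"{history}\nuser: {new_question}"
        return f"user: {new_question}"


memory = ChatMemory(max_messages=2)
memory.add("user", "My name is Sam.")
memory.add("assistant", "Nice to meet you, Sam.")
memory.add("user", "I like Italian food.")

prompt = memory.as_prompt("What is my name?")
print(prompt)
```

With max_messages=2 the first message, the one containing the name, has already been dropped by the time the question is asked. That is the trade-off of a fixed-size buffer: it keeps prompts short but forgets older context.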

By Wahid Hamdi